Saturday, May 10, 2008

Artificial Neural Networks/Activation Functions

There are a number of common activation functions in use with neural networks. This is not an exhaustive list.

Step Function

A step function is a function like that used by the original Perceptron. The output is a certain value, A1, if the input sum is above a certain threshold and A0 if the input sum is below a certain threshold. The values used by the Perceptron were A1 = 1 and A0 = 0.

These kinds of step activation functions are useful for binary classification schemes. In other words, when we want to classify an input pattern into one of two groups, we can use a binary classifier with a step activation function. Another use for this would be to create a set of small feature identifiers. Each identifier would be a small network that would output a 1 if a particular input feature is present, and a 0 otherwise. Combining multiple feature detectors into a single network would allow a very complicated clustering or classification problem to be solved.
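A minimal Python sketch of such a step activation is shown here. The names `step` and `detect_features` are illustrative, and treating an input exactly equal to the threshold as "above" is an assumption, since the text does not specify the tie case.

```python
def step(input_sum, threshold=0.0, a1=1, a0=0):
    """Return a1 when the input sum reaches the threshold, otherwise a0."""
    # Assumption: an input exactly equal to the threshold counts as "above".
    return a1 if input_sum >= threshold else a0


def detect_features(input_sums, threshold=0.0):
    """Apply the step function to the sums of several feature detectors."""
    return [step(s, threshold) for s in input_sums]


print(step(0.7, threshold=0.5))   # 1
print(step(0.2, threshold=0.5))   # 0
print(detect_features([0.9, -0.3, 0.5], threshold=0.5))  # [1, 0, 1]
```

Each entry of the returned list plays the role of one small feature identifier: 1 if the feature is judged present, 0 otherwise.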

Linear Combination

In a linear combination, the weighted sum input of the neuron plus a linearly dependent bias becomes the system output. Specifically:

y = ζ + b

In these cases, the sign of the output is considered to be equivalent to the 1 or 0 of the step function systems, which makes the two methods equivalent if

θ = − b
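The equivalence can be checked numerically with a short Python sketch. The function names are hypothetical, and mapping a non-negative output to 1 (and a negative output to 0) is an assumption about how the sign is read.

```python
def step_output(zeta, theta):
    # Step activation: 1 when the weighted sum reaches the threshold theta.
    return 1 if zeta >= theta else 0


def linear_output(zeta, b):
    # Linear combination: y = zeta + b.
    return zeta + b


def sign_as_binary(y):
    # Assumption: a non-negative output maps to 1, a negative output to 0.
    return 1 if y >= 0 else 0


theta = 0.5
b = -theta  # the equivalence condition theta = -b
for zeta in [-1.0, 0.0, 0.25, 0.5, 0.75, 2.0]:
    assert step_output(zeta, theta) == sign_as_binary(linear_output(zeta, b))
print("step and linear-sign outputs agree for theta = -b")
```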

Continuous Log-Sigmoid Function

A log-sigmoid function, also known as a logistic function, is given by the relationship:

\Pi(t) = \frac{1}{1 + e^{-\beta t}}

Where β is a slope parameter. This is called the log-sigmoid because a sigmoid can also be constructed using the hyperbolic tangent function instead of this relation. In that case, it would be called a tan-sigmoid. Here, we will refer to the log-sigmoid as simply “sigmoid”. The sigmoid has the property of being similar to the step function, but with the addition of a region of uncertainty. Sigmoid functions in this respect are very similar to the input-output relationships of biological neurons, although not exactly the same. Below is the graph of a sigmoid function.

Sigmoid functions are also prized because their derivatives are easy to calculate, which is helpful for calculating the weight updates in certain training algorithms. The derivative is given by:

\frac{d\Pi(t)}{dt} = \beta \Pi(t)[1 - \Pi(t)]

For β = 1 this reduces to Π(t)[1 − Π(t)].
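A small Python sketch of the log-sigmoid and its derivative follows. For a general slope β, the chain rule contributes a factor of β, which equals 1 in the common case. The helper `numerical_derivative` is not part of the method; it is only a finite-difference sanity check added for illustration.

```python
import math


def sigmoid(t, beta=1.0):
    """Log-sigmoid (logistic) function with slope parameter beta."""
    return 1.0 / (1.0 + math.exp(-beta * t))


def sigmoid_derivative(t, beta=1.0):
    """Derivative with respect to t, written in terms of the sigmoid itself."""
    s = sigmoid(t, beta)
    return beta * s * (1.0 - s)


def numerical_derivative(f, t, h=1e-6):
    """Central finite difference, used only to sanity-check the formula."""
    return (f(t + h) - f(t - h)) / (2.0 * h)


print(sigmoid(0.0))             # 0.5
print(sigmoid_derivative(0.0))  # 0.25
for beta in (1.0, 2.0):
    approx = numerical_derivative(lambda t: sigmoid(t, beta), 0.3)
    assert abs(approx - sigmoid_derivative(0.3, beta)) < 1e-6
```

Because the derivative reuses the already computed sigmoid value, a training algorithm can obtain it with one multiplication and one subtraction per neuron. Note that this simple form can overflow in `math.exp` for very large negative inputs.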

Continuous Tan-Sigmoid Function

Softmax Function

The softmax activation function is useful predominantly in the output layer of a clustering system. Softmax functions convert a raw value into a posterior probability. This provides a measure of certainty. The softmax activation function is given as:

y_i = \frac{e^{\zeta_i}}{\sum_{j\in L} e^{\zeta_j} }

L is the set of neurons in the output layer.
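The softmax formula can be sketched in Python as follows. Subtracting the maximum before exponentiating is a common numerical safeguard that is not part of the formula above; it leaves the result mathematically unchanged. The input values are arbitrary.

```python
import math


def softmax(zetas):
    """Convert a list of raw output-layer sums into values that sum to 1."""
    # Subtracting the maximum does not change the result mathematically,
    # but it avoids overflow in exp() for large inputs.
    m = max(zetas)
    exps = [math.exp(z - m) for z in zetas]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([2.0, 1.0, 0.1])
print(probs)       # three positive values, largest first
print(sum(probs))  # approximately 1.0
```

The largest raw value receives the largest share, and all outputs lie between 0 and 1, which is what allows them to be read as a measure of certainty.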

Artificial Neural Networks/History

Early History

The history of neural networking arguably started in the late 1800s with scientific attempts to study the workings of the human brain. In 1890, William James published the first work about brain activity patterns. In 1943, McCulloch and Pitts produced a model of the neuron that is still used today in artificial neural networking. This model is broken into two parts: a summation over weighted inputs and an output function of the sum.

Artificial Neural Networking

In 1949, Donald Hebb published The Organization of Behavior, which outlined a law for synaptic neuron learning. This law, later known as Hebbian Learning in honor of Donald Hebb, is one of the simplest and most straightforward learning rules for artificial neural networks.

In 1951, Marvin Minsky created the first ANN while working at Princeton.

In 1958 The Computer and the Brain was published posthumously, a year after John von Neumann’s death. In that book, von Neumann proposed many radical changes to the way in which researchers had been modeling the brain.

Mark I Perceptron

The Mark I Perceptron was also created in 1958, at Cornell University by Frank Rosenblatt. The Perceptron was an attempt to use neural network techniques for character recognition. The Mark I Perceptron was a linear system, and was useful for solving problems where the input classes were linearly separable in the input space. In 1962, Rosenblatt published the book Principles of Neurodynamics, containing much of his research and ideas about modeling the brain.

The Perceptron was a linear system with a simple input-output relationship defined as a McCulloch-Pitts neuron with a step activation function. In this model, the weighted sum of the inputs, ζ, was compared to a threshold θ. The output, y, was defined as a simple step function:

y = \begin{cases} 1 & \text{if } \zeta \geq \theta \\ 0 & \text{otherwise} \end{cases}

Despite the early success of the Perceptron and artificial neural network research, there were many people who felt that there was limited promise in these techniques. Among these were Marvin Minsky and Seymour Papert, whose 1969 book Perceptrons was used to discredit ANN research and focus attention on the apparent limitations of ANN work. One of the limitations that Minsky and Papert pointed out most clearly was the fact that the Perceptron was not able to classify patterns that are not linearly separable in the input space. Below, the figure on the left shows an input space with a linearly separable classification problem. The figure on the right, in contrast, shows an input space where the classifications are not linearly separable.

Although the Mark I Perceptron failed to handle data that are not linearly separable, this was not an inherent failure of the technology but a matter of scale. The Mark I was a two-layer Perceptron; Hecht-Nielsen showed in 1990 that a three-layer machine (multi-layer Perceptron, or MLP) was capable of solving nonlinear separation problems. The book Perceptrons ushered in what some call the “quiet years”, when interest in ANN research was at a minimum. It wasn’t until the rediscovery of the backpropagation algorithm in 1986 that the field gained widespread interest again.

Backpropagation and Rebirth

The backpropagation algorithm, originally discovered by Werbos in 1974, was rediscovered in 1986 with the publication of Learning Internal Representations by Error Propagation by Rumelhart, Hinton and Williams. Backpropagation is a form of the gradient descent algorithm used with artificial neural networks for minimization and curve-fitting.

In 1987 the IEEE annual international ANN conference was started for ANN researchers. The International Neural Network Society (INNS) was formed in the same year, followed by the INNS journal Neural Networks in 1988.

Biological Neural Nets

In the case of a biological neural net, neurons are living cells with axons and dendrites that form interconnections through electro-chemical synapses. Signals are transmitted through the cell body (soma), from the dendrite to the axon, as an electrical impulse. In the pre-synaptic membrane of the axon, the electrical signal is converted into a chemical signal in the form of various neurotransmitters. These neurotransmitters, along with other chemicals present in the synapse, form the message that is received by the post-synaptic membrane of the dendrite of the next cell, where it is in turn converted back into an electrical signal.

This page is going to provide a brief overview of biological neural networks, but the reader will have to find a better source for a more in-depth coverage of the subject.

Synapses

The figure above shows a model of the synapse showing the chemical messages of the synapse moving from the axon to the dendrite. Synapses are not simply a transmission medium for chemical signals, however. A synapse is capable of modifying itself based on the signal traffic that it receives. In this way, a synapse is able to “learn” from its past activity. This learning happens through the strengthening or weakening of the connection. External factors can also affect the chemical properties of the synapse, including body chemistry and medication.

Neurons

Cells have multiple dendrites, each of which receives a weighted input. Inputs are weighted by the strength of the synapse that the signal travels through. The total input to the cell is the sum of all such synaptic weighted inputs. Neurons utilize a threshold mechanism, so that signals below a certain threshold are ignored, but signals above the threshold cause the neuron to fire. Neurons follow an “all or nothing” firing scheme, and are similar in this respect to a digital component. Once a neuron has fired, a certain refractory period must pass before it can fire again.

Biological Networks

Biological neural systems are heterogeneous, in that there are many different types of cells with different characteristics. Biological systems are also characterized by macroscopic order, but nearly random interconnection on the microscopic layer. The random interconnection at the cellular level is rendered into a computational tool by the learning process of the synapse, and the formation of new synapses between nearby neurons.

Artificial Neural Networks

Artificial Neural Networks, also known as “Artificial neural nets”, “neural nets”, or ANN for short, are a computational tool modeled on the interconnection of neurons in the nervous systems of humans and other organisms. Biological Neural Nets (BNN) are the naturally occurring equivalent of the ANN. Both BNN and ANN are network systems constructed from atomic components known as “neurons”. Artificial neural networks are very different from biological networks, although many of the concepts and characteristics of biological systems are faithfully reproduced in the artificial systems. Artificial neural nets are a type of non-linear processing system that is ideally suited for a wide range of tasks, especially tasks where there is no existing algorithm for task completion. ANN can be trained to solve certain problems using a teaching method and sample data. In this way, identically constructed ANN can be used to perform different tasks depending on the training received. With proper training, ANN are capable of generalization, the ability to recognize similarities among different input patterns, especially patterns that have been corrupted by noise.

What Are Neural Nets?

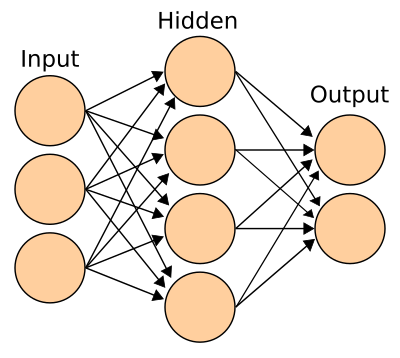

The term “Neural Net” refers to both the biological and artificial variants, although typically the term is used to refer to artificial systems only. Mathematically, neural nets are nonlinear. Each layer represents a non-linear combination of non-linear functions from the previous layer. Each neuron is a multiple-input, multiple-output (MIMO) system that receives signals from the inputs, produces a resultant signal, and transmits that signal to all outputs. Practically, neurons in an ANN are arranged into layers. The first layer that interacts with the environment to receive input is known as the input layer. The final layer that interacts with the output to present the processed data is known as the output layer. Layers between the input and the output layer that do not have any interaction with the environment are known as hidden layers. Increasing the complexity of an ANN, and thus its computational capacity, requires the addition of more hidden layers, and more neurons per layer.
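The layered arrangement described above can be sketched in Python as a forward pass. This is an illustrative sketch only: the sigmoid activation, the bias terms, the layer sizes and all weight values are assumptions chosen for the example, and the input layer is represented simply by the input vector.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute one layer: each neuron applies a sigmoid to its weighted sum."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        zeta = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(sigmoid(zeta))
    return outputs


def network_forward(inputs, layers):
    """Pass a signal through each (weights, biases) layer in order."""
    signal = inputs
    for weights, biases in layers:
        signal = layer_forward(signal, weights, biases)
    return signal


# Hypothetical 2-input network with one hidden layer of 3 neurons
# and an output layer of 1 neuron; the weights are arbitrary.
hidden = ([[0.5, -0.4], [0.3, 0.8], [-0.6, 0.1]], [0.0, 0.1, -0.2])
output = ([[1.0, -1.0, 0.5]], [0.05])
print(network_forward([1.0, 0.0], [hidden, output]))
```

Adding a hidden layer amounts to appending another `(weights, biases)` pair to the list, and adding neurons to a layer amounts to adding rows to its weight matrix.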

Biological neurons are connected in very complicated networks. Some regions of the human brain, such as the cerebellum, are composed of very regular patterns of neurons. Other regions of the brain, such as the cerebrum, have less regular arrangements. A typical biological neural system has millions or billions of cells, each with thousands of interconnections with other neurons. Current artificial systems cannot achieve this level of complexity, and so cannot be used to reproduce the behavior of biological systems exactly.

Processing Elements

In an artificial neural network, neurons can take many forms and are typically referred to as Processing Elements (PE) to differentiate them from the biological equivalents. The PE are connected into a particular network pattern, with different patterns serving different functional purposes. Unlike biological neurons with chemical interconnections, the PE in artificial systems are electrical only, and may be either analog, digital, or a hybrid. However, to reproduce the effect of the synapse, the connections between PE are assigned multiplicative weights, which can be calibrated or “trained” to produce the proper system output.

McCulloch-Pitts Model

Processing Elements are typically defined in terms of two equations that represent the McCulloch-Pitts model of a neuron:

\zeta = \sum_{i} w_i x_i

y = \sigma(\zeta)

Where ζ is the weighted sum of the inputs (the inner product of the input vector and the tap-weight vector), and σ(ζ) is a function of the weighted sum. If we recognize that the weight and input elements form vectors w and x, the ζ weighted sum becomes a simple dot product:

\zeta = \mathbf{w} \cdot \mathbf{x}

The function σ may be called either the activation function (in the case of a threshold comparison) or a transfer function. The image to the right shows this relationship diagrammatically. The dotted line in the center of the neuron represents the division between the calculation of the input sum using the weight vector, and the calculation of the output value using the activation function. In an actual artificial neuron, this division may not be made explicitly.
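The two-part model can be sketched in Python by keeping the weighted sum and the output function separate, mirroring the division described above. The function names, the AND weights and the threshold of 1.5 are hypothetical choices for illustration, as is firing when the sum exactly equals the threshold.

```python
def weighted_sum(weights, inputs):
    """zeta: the dot product of the weight vector and the input vector."""
    if len(weights) != len(inputs):
        raise ValueError("weights and inputs must have the same length")
    return sum(w * x for w, x in zip(weights, inputs))


def neuron(weights, inputs, activation):
    """y = sigma(zeta), with sigma passed in as a callable."""
    return activation(weighted_sum(weights, inputs))


def threshold_at(theta):
    """Build a step activation that fires when zeta reaches theta."""
    return lambda zeta: 1 if zeta >= theta else 0


# Hypothetical weights that make the neuron behave like a logical AND.
and_weights = [1.0, 1.0]
fire = threshold_at(1.5)
for x in ([0, 0], [0, 1], [1, 0], [1, 1]):
    print(x, neuron(and_weights, x, fire))  # only [1, 1] yields 1
```

Passing the activation in as a callable means the same `neuron` function works unchanged with a step, linear, or sigmoid output function.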

The inputs to the network, x, come from an input space and the system outputs are part of the output space. For some networks, the output space Y may be as simple as {0, 1}, or it may be a complex multi-dimensional space. Neural networks tend to have one input per degree of freedom in the input space, and one output per degree of freedom in the output space.

The tap-weight vector is updated during training by various algorithms. One of the more popular is the backpropagation algorithm, which we will discuss in more detail later.

Why Use Neural Nets?

Artificial neural nets have a number of properties that make them an attractive alternative to traditional problem-solving techniques. The two main alternatives to using neural nets are to develop an algorithmic solution, and to use an expert system.

Algorithmic methods arise when there is sufficient information about the data and the underlying theory. By understanding the data and the theoretical relationship between the data, we can directly calculate unknown solutions from the problem space. Ordinary von Neumann computers can be used to calculate these relationships quickly and efficiently from a numerical algorithm.

Expert systems, by contrast, are used in situations where there is insufficient data and theoretical background to create any kind of a reliable problem model. In these cases, the knowledge and rationale of human experts is codified into an expert system. Expert systems emulate the deduction processes of a human expert, by collecting information and traversing the solution space in a directed manner. Expert systems are typically able to perform very well in the absence of an accurate problem model and complete data. However, where sufficient data or an algorithmic solution is available, expert systems are a less than ideal choice.

Artificial neural nets are useful for situations where there is an abundance of data, but little underlying theory. The data, which typically arises through extensive experimentation, may be non-linear, non-stationary, or chaotic, and so may not be easily modeled. Input-output spaces may be so complex that a reasonable traversal with an expert system is not a satisfactory option. Importantly, neural nets do not require any a priori assumptions about the problem space, not even information about statistical distribution. Though such assumptions are not required, it has been found that the addition of such a priori information as the statistical distribution of the input space can help to speed training. Many mathematical problem models tend to assume that data lies in a standard distribution pattern, such as Gaussian or Maxwell-Boltzmann distributions. Neural networks require no such assumption. During training, the neural network performs the necessary analytical work, which would require non-trivial effort on the part of the analyst if other methods were to be used.

Learning

Learning is a fundamental component to an intelligent system, although a precise definition of learning is hard to produce. In terms of an artificial neural network, learning typically happens during a specific training phase. Once the network has been trained, it enters a production phase where it produces results independently. Training can take on many different forms, using a combination of learning paradigms, learning rules, and learning algorithms. A system which has distinct learning and production phases is known as a static network. Networks which are able to continue learning during production use are known as dynamical systems.

A learning paradigm is supervised, unsupervised or a hybrid of the two, and reflects the way in which training data is presented to the neural network. A learning rule is a model for the types of methods to be used to train the system, and also a goal for what types of results are to be produced. The learning algorithm is the specific mathematical method that is used to update the inter-neuronal synaptic weights during each training iteration. Under each learning rule, there are a variety of possible learning algorithms for use. Most algorithms can only be used with a single learning rule. Learning rules and learning algorithms can typically be used with either supervised or unsupervised learning paradigms, however, and each will produce a different effect.

Overtraining is a problem that arises when the network is trained for too long on the same training examples, so that it memorizes them and becomes incapable of useful generalization. This can also occur when there are too many neurons in the network and the capacity for computation exceeds the dimensionality of the input space. During training, care must be taken not to over-train the network, and different numbers of training examples could produce very different results in the quality and robustness of the network.

Network Parameters

There are a number of different parameters that must be decided upon when designing a neural network. Among these parameters are the number of layers, the number of neurons per layer, the number of training iterations, et cetera. Some of the more important parameters in terms of training and network capacity are the number of hidden neurons, the learning rate and the momentum parameter.

Number of Hidden Neurons

Hidden neurons are the neurons that are neither in the input layer nor the output layer. These neurons are essentially hidden from view, and their number and organization can typically be treated as a black box to people who are interfacing with the system. Using additional layers of hidden neurons enables greater processing power and system flexibility. This additional flexibility comes at the cost of additional complexity in the training algorithm. Having too many hidden neurons is analogous to a system of equations with more equations than there are free variables: the system is overspecified, and is incapable of generalization. Having too few hidden neurons, conversely, can prevent the system from properly fitting the input data, and reduces the robustness of the system.